True confession: Many decades ago, my 17-year-old self created a synthetic identity with ready-made biometric authentication…otherwise known as a fake ID. Living in Hawaii, I needed a faraway place to call my fake home, and I picked Yonkers, New York. You could call me a real-life reverse McLovin.

Get yours today! Credit: Amazon

Fast forward to now. Identification is, of course, much more easily checked – but it’s also much more easily faked. In large part that’s thanks to generative AI, which dramatically increases the scale and automation of attacks.

CrowdStrike’s Global Threat Report documents how “identity threats exploded in 2023” with a boost from genAI, and that’s not even the half of it. Read on to understand where the threat is coming from and what to do about it.

Biometrics, We Hardly Knew Ye

Many organizations have been making upgrades to strengthen their authentication capabilities, often through the application of biometrics.

But is biometric authentication the factor you think it is?

Authentication Factors: Thou Shalt Count to Three

Classically, there are three factors used to verify the authenticity of a credential; using them in combination contributes to authentication strength. (Other contextual cues in their infinite variety form a phantom fourth factor. Five is right out!)

- Something you know, like a password, is what NIST’s Digital Identity Guidelines call a “memorized secret”.

- Something you have, like your association with a particular mobile device, is what NIST refers to as an “out-of-band authenticator”.

- Something you are, like your particular face or fingerprint, is what NIST calls a “biometric characteristic”.
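To make the counting rule concrete, here is a minimal Python sketch that sorts authenticators into the three categories and checks whether a combination really spans more than one. The authenticator names and the mapping are hypothetical. The pairing rule in `biometric_use_ok` is an assumed, simplified policy modeled loosely on NIST SP 800-63B’s requirement that biometrics be used only alongside a physical authenticator.

```python
from enum import Enum


class Factor(Enum):
    KNOW = "something you know"  # memorized secret
    HAVE = "something you have"  # e.g. out-of-band authenticator
    ARE = "something you are"    # biometric characteristic


# Hypothetical mapping from authenticator types to factor categories.
AUTHENTICATOR_FACTORS = {
    "password": Factor.KNOW,
    "pin": Factor.KNOW,
    "security_question": Factor.KNOW,
    "phone_push": Factor.HAVE,
    "security_key": Factor.HAVE,
    "fingerprint": Factor.ARE,
    "voiceprint": Factor.ARE,
}


def distinct_factors(authenticators: list[str]) -> set[Factor]:
    """Collapse the presented authenticators into their factor categories."""
    return {AUTHENTICATOR_FACTORS[a] for a in authenticators}


def is_multi_factor(authenticators: list[str]) -> bool:
    """Strength comes from combining different categories, not piling up one."""
    return len(distinct_factors(authenticators)) >= 2


def biometric_use_ok(authenticators: list[str]) -> bool:
    """Assumed policy: a biometric is acceptable only with something you have."""
    factors = distinct_factors(authenticators)
    return Factor.ARE not in factors or Factor.HAVE in factors


if __name__ == "__main__":
    print(is_multi_factor(["password", "security_question"]))  # False: one factor
    print(is_multi_factor(["password", "voiceprint"]))          # True
    print(biometric_use_ok(["password", "voiceprint"]))         # False: no device
    print(biometric_use_ok(["fingerprint", "security_key"]))    # True
```

The first check captures the classic trap: a password plus a security question is still only one factor, however many prompts the user sees.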

Two of my financial services providers recently rolled out voice authentication as a new “strong” method, touting not just its ease of use but also its security. We are assured, for example, that “Your voiceprint is stored securely as a mathematical equation, and only works for verification with our system.”

Now why would there be a Spinal Tap reference here? Credit: MakeAGIF

Is that really how things work?

Biometrics Are Different

The unfortunate fact is that biometrics make a better username than a password. That is, they’re pretty good at distinguishing “you” from “other people”, but limited in their ability to confirm that you are you. As NIST says:

For a variety of reasons, this document supports only limited use of biometrics for authentication.

Their reasons are important to understand:

- Biometric comparison is probabilistic, based on statistical likelihood, rather than deterministic, as when a system checks a presented password or device against a pre-registered one. It yields a letter grade rather than a pass/fail.

- The false match rate (FMR) and false non-match rate (FNMR) of a biometric method are critical statistics, but a low FMR on its own shouldn’t be read as high authentication confidence. FMR doesn’t even account for spoofing attacks.

- Unlike with a password or device, there are few circumstances where you can properly revoke a biometric. After all, you can’t just do a “fingerprint reset” on yourself.

- The biggest distinction is that biometric characteristics aren’t secrets! Many biometrics make terrible secrets because they’re part of our exhaust data, both online and IRL. It’s a losing battle to protect something like a unique face from being seen.

- As a result, we must rely on liveness detection to ensure a binding between the presented biometric and the person. And that means we must trust a complex chain of detection sensors and processors.
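The difference between a pass/fail comparison and a letter grade can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a production matcher: `password_matches` uses the standard library’s PBKDF2 with an arbitrarily chosen iteration count, and the similarity scores are invented numbers, not measurements from any real biometric system.

```python
import hashlib
import hmac
import os


def password_matches(presented: str, salt: bytes, stored_hash: bytes) -> bool:
    """Deterministic: re-derive the hash and compare exactly. Pass or fail."""
    candidate = hashlib.pbkdf2_hmac("sha256", presented.encode(), salt, 100_000)
    return hmac.compare_digest(candidate, stored_hash)


def biometric_accepts(score: float, threshold: float) -> bool:
    """Probabilistic: a similarity score becomes accept/reject only via a chosen threshold."""
    return score >= threshold


def error_rates(genuine: list[float], impostor: list[float],
                threshold: float) -> tuple[float, float]:
    """Return (FMR, FNMR) at a threshold.

    FMR  = share of impostor comparisons wrongly accepted.
    FNMR = share of genuine comparisons wrongly rejected.
    """
    fmr = sum(biometric_accepts(s, threshold) for s in impostor) / len(impostor)
    fnmr = sum(not biometric_accepts(s, threshold) for s in genuine) / len(genuine)
    return fmr, fnmr


# Made-up similarity scores, for illustration only.
GENUINE = [0.91, 0.85, 0.78, 0.95, 0.66]
IMPOSTOR = [0.12, 0.40, 0.71, 0.25, 0.33]

if __name__ == "__main__":
    salt = os.urandom(16)
    stored = hashlib.pbkdf2_hmac("sha256", b"correct horse", salt, 100_000)
    print(password_matches("correct horse", salt, stored))  # True
    print(password_matches("correct hors", salt, stored))   # False
    for t in (0.7, 0.8):
        print(t, error_rates(GENUINE, IMPOSTOR, t))
```

With these made-up scores, raising the threshold from 0.7 to 0.8 pushes FMR from 0.2 down to 0.0 but doubles FNMR from 0.2 to 0.4. And because both rates are measured on genuine and ordinary impostor comparisons, neither reflects how a well-made deepfake would score, which is why liveness detection has to carry so much weight.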

The Last Decade Saw a Legit Biometrics Revolution

This isn’t to say that biometrics can’t form a crucial part of secure ecosystems.

Touch ID’s launch in 2013 started something big. Its immediate impact was to make the iPhone 5s easier to unlock. Fingerprint readers had previously required cumbersome end-user processes and erred on the side of extra-low FMRs. For the price of a few more false positives, and with a painless enrollment process built into the experience, Touch ID – and in 2017, Face ID – led to a remarkable cascade of use cases.

- Phone usage increased. In 2013, the year of Touch ID, people were checking their phones 110 times a day. By 2022, that number was up to 352 times a day, or once every three minutes on average. (You know you’ve done it!)

- Phone security increased. In 2013, 53% of phones were kept locked. By 2021, locked phones were ubiquitous at nearly 99%.

- Phones became de facto data wallets. The new mobile environment, involving a secure element, made on-device storage of personal data – including items beyond biometric templates for face and fingerprint matches – attractive.

- Phones became wallets, period. The availability of this data, and the ability to bind it to the end-user with biometrics, unleashed a flood of payment scenarios and the digital wallet era.

Mobile OS-level biometric unlocking isn’t without complications, such as reliance on a memorized secret (PIN) for confirmation and recovery. But the sheer weight of improved security and value has been impressive.

AI Cut Short the Server-Side Biometrics Revolution

Unfortunately, we’re in a dramatic new threat landscape.

I’m focusing on the “server-side” revolution because wholly digital identity scenarios – foreign travel pre-authorization, remote employee onboarding, direct-to-consumer eCommerce, gaming – have become inescapable. And they rely more and more on server-side biometrics for identity verification and authentication. These scenarios are now at extra risk when they use these biometric checks without a trusted device as another factor.

The generative AI revolution took mere weeks to explode from a bubbling low-level concern into a bona fide threat. Late 2022 saw the releases of Whisper, the DALL-E 2 API, and ChatGPT. By early 2023, organizations were experiencing disturbingly widespread AI-boosted identity fraud: in a survey concluding in January 2023, Regula Forensics found that 29% of organizations were being targeted by video deepfakes and 37% by voice deepfakes.

By December 2023, iProov’s Threat Intelligence Report revealed that face swaps increased 704% from the first half of the year to the second. They warned that “Face swaps are now firmly established as the deepfake of choice among persistent threat actors,” and that nearly half of the threat groups they’re tracking were newly created.

The report shares an up-to-date example of face swap technology (see the second listed video) that provokes amazement among viewers but is becoming routine among threat actors.

Creepy. Credit: iProov

AI technology can be used cleverly for good even in the deepfake realm (check out HYPR’s Bojan Simic “speaking” in Japanese), but even the creators of the base technologies are spooking themselves about the consequences. OpenAI has said it is holding its Voice Engine back from general release because of the potential for synthetic voice misuse.

A Brief Tour Through the Consequences

The monetary costs of this new landscape are shocking – but we should also recognize the societal costs of these very personal forms of attack.

Classic Cyber

The most obvious consequence is classic cyber risk and fraud, since most of these attacks are intended for financial gain. A dramatic example came to light in early February 2024, when a Hong Kong finance professional fell for a unique form of spear phishing: a fraudulent request to transfer HK$200 million, backed by an entire cast of senior executives deepfaked on video conference calls.

Political

Nonmonetary but serious motivations include disrupting the political landscape. A voice deepfake, not the real President Joe Biden, was behind a series of robocalls in January 2024 urging New Hampshirites not to vote in their state’s primary election. Granite Staters who remembered a similar controversy from mid-2022 may have been extra confused because the earlier instance was a false alarm.

Cultural

The constant uncertainty about what’s real affects not just famous people but all individuals.

Every 100% digital interaction without a definitive authentication method now has question marks around it. We could call this the Voight-Kampff challenge, after the test in the Blade Runner movie (and its source novel) that relied on micro-expressions, bodily functions, and expressions of empathy to root out non-humans. To the question “How can people not tell this is AI?” posted in March 2024, one Redditor said:

“Seeing AI pictures, reading AI generated text, I’m starting to feel like Rick Deckard. I’m no longer able to trust anything I see or even ‘people’ I talk to through chat OR voice. I’m giving everyone and everything around me the Turing Test without even realizing it.”

Another fake ID you can go and buy. Credit: eBay

Check out the TRUE project, which is taking this societal risk very seriously indeed.

Doing Battle Against These Risks

What are our best options to mitigate and prevent these risks, when AI is powering high-scale attacks and elaborate spear-phishing episodes alike?

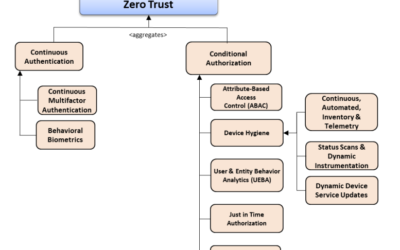

Some options count as obvious CISOcraft (or are perhaps emerging as CIDOcraft). A Zero-Trust mindset and a commitment to layering signals and decision-making actions still go a long way. A LastPass employee recently batted away a social engineering attack that had a deepfake element but smelled wrong for traditional reasons. And successful attacks involving stolen ChatGPT credentials are AI-adjacent but not necessarily a consequence of the AI era.

Here are additional recommendations for battling these dramatic new threats.

Respect the New Arms Race We’re In

Now is the time to develop a deep appreciation for the ways biometrics are different and the ways AI is rapidly creating unknowns. Follow NIST’s Digital Identity Guidelines in these areas, and watch for the forthcoming final fourth revision. Also check out the work of the Kantara Deepfakes group.

The Liveness.com site reminds us that two-dimensional liveness checking isn’t all it’s cracked up to be, yet. So, increase your awareness of the state of the art in liveness detection and participate in the security community surrounding it. If you’ve got a great solution, consider taking part in Face 2024 and other competitions. The FTC’s recent voice cloning challenge produced heartening results.

Pair Biometrics With Other Authentication Tricks

Passwordless experiences that keep still-extant passwords off the wire are better than password-bearing interactions. Much like Touch ID, they have the potential to reduce ecosystem-wide risk. So, accelerate your plans to move to phishing-resistant authentication methods. Liminal research says 48% of practitioners who plan to adopt passwordless solutions in the next two years prefer biometric authentication.

FIDO multi-device credentials aren’t perfect, but they hit a new sweet spot for security, privacy, user choice, and user experience. So, use passkeys where possible. They typically leverage biometrics in proper fashion: the biometric check happens locally on the user’s device, and the credential it unlocks is bound to the site it was registered for.
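One reason passkeys resist phishing is origin binding. The Python sketch below shows only the server-side check of the `type`, `challenge`, and `origin` fields in WebAuthn’s `clientDataJSON`. The origins are hypothetical, and a real relying party must also verify the authenticator data and the signature, ideally with a maintained WebAuthn library rather than hand-rolled code like this.

```python
import base64
import binascii
import hmac
import json
import secrets

# Hypothetical relying-party origin for this sketch.
EXPECTED_ORIGIN = "https://bank.example"


def b64url_encode(raw: bytes) -> str:
    """WebAuthn encodes binary fields as unpadded base64url."""
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def b64url_decode(text: str) -> bytes:
    """Restore the stripped padding before decoding."""
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def check_client_data(client_data_json: bytes, expected_challenge: bytes,
                      expected_origin: str = EXPECTED_ORIGIN) -> bool:
    """Check clientDataJSON for an authentication ceremony.

    Partial verification only: authenticatorData (rpIdHash, flags,
    sign count) and the signature still need checking elsewhere.
    """
    data = json.loads(client_data_json)
    if data.get("type") != "webauthn.get":
        return False
    try:
        challenge = b64url_decode(data.get("challenge", ""))
    except (binascii.Error, ValueError):
        return False
    if not hmac.compare_digest(challenge, expected_challenge):
        return False
    # Origin binding: the browser records the origin it actually talked to,
    # so an assertion relayed from a lookalike site names the wrong origin.
    return data.get("origin") == expected_origin


if __name__ == "__main__":
    challenge = secrets.token_bytes(32)
    genuine = json.dumps({"type": "webauthn.get",
                          "challenge": b64url_encode(challenge),
                          "origin": "https://bank.example"}).encode()
    phished = json.dumps({"type": "webauthn.get",
                          "challenge": b64url_encode(challenge),
                          "origin": "https://bank-example.phish.example"}).encode()
    print(check_client_data(genuine, challenge))  # True
    print(check_client_data(phished, challenge))  # False
```

In practice the browser and authenticator scope a credential to its registered site in the first place; the server-side origin check is a second line of defense against relayed assertions.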

Get Fine-Grained About Verifying Identity Data

If you want to be sure you’re not looking at a “swapped face”, add more identity verification signals to your registration and authentication user journeys. The trick is to scope those signals to individual pieces of data, make them more privacy-sensitive, and make them less intrusive in the user experience. An example is privacy-sensitive age estimation, a new biometric technique for responding to the age-appropriate design codes and website age-gating mandates that are sprouting up.

The emerging verifiable credentials era presents an intriguing opportunity to start trading in verified-identity “small data”. If you could ask for and receive individual verified identity signals from a user’s wallet — with biometric and binding assurances about the quality of those signals — what would you ask for?
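Asking for individual verified signals can be sketched with salted hashes, loosely modeled on the selective-disclosure approach used by formats such as SD-JWT (this is not that wire format). The issuer commits to a digest of each claim, and the holder reveals only the claims a verifier asks for. Issuer signatures are omitted, and the claim names and values are hypothetical.

```python
import hashlib
import json
import secrets


def make_disclosure(name: str, value) -> tuple[str, str]:
    """Salt a single claim and return (disclosure, digest)."""
    disclosure = json.dumps([secrets.token_urlsafe(16), name, value])
    digest = hashlib.sha256(disclosure.encode("utf-8")).hexdigest()
    return disclosure, digest


def issue(claims: dict) -> tuple[dict, dict]:
    """Issuer: commit to each claim by digest only; the holder keeps the disclosures."""
    disclosures, digests = {}, []
    for name, value in claims.items():
        disclosure, digest = make_disclosure(name, value)
        disclosures[name] = disclosure
        digests.append(digest)
    # A real issuer would sign this object; signing is omitted in this sketch.
    credential = {"_sd": sorted(digests)}
    return credential, disclosures


def verify_disclosure(credential: dict, disclosure: str) -> tuple[str, object]:
    """Verifier: accept a revealed claim only if the issuer committed to it."""
    digest = hashlib.sha256(disclosure.encode("utf-8")).hexdigest()
    if digest not in credential["_sd"]:
        raise ValueError("claim was not committed to by the issuer")
    _salt, name, value = json.loads(disclosure)
    return name, value


if __name__ == "__main__":
    # Hypothetical claims for illustration.
    credential, held = issue({"given_name": "Alex", "address": "1 Main St",
                              "age_over_18": True})
    # The holder reveals one verified signal; name and address stay private.
    print(verify_disclosure(credential, held["age_over_18"]))  # ('age_over_18', True)
```

The salt matters: without it, a verifier could confirm an undisclosed claim such as `age_over_18: true` simply by hashing the handful of likely values.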

Finally: Be wary of simply participating in a classical security arms race as your only strategy. Bots battling bots for inches of fraud detection ground won’t get us where we need to go. Incremental gains in liveness detection will be swamped by AI’s endless invention. We’re in the biometrics singularity now — so we need to innovate more than ever before.

Eve is a globally recognized pioneer in identity and access management and standards. Her roots are in semi-structured data modeling and the API economy and include a passion for fostering successful ecosystems and individual empowerment. At Venn Factory she drives identity, security, and privacy success in the connected world by bridging the gap between technical intricacies and strategic business outcomes.

Eve’s leadership on pivotal standards such as XML, SAML, UMA, and HEART, as well as on industry efforts like UK Open Banking, US government health IT, and the medical Internet of Things, demonstrates her unwavering commitment to innovation.

As CTO of ForgeRock, Eve oversaw emerging technology R&D, evangelism, and innovation culture, and empowered her team and cross-functional colleagues to deliver results to dozens of Global 5000 customers, partners, analysts, publications, and events. She previously served as a Forrester Research security and risk analyst covering IAM, strong authentication, and API security.

Thanks for reading! Contact Eve for an expanded version of this article.